- Tetras Large Model

- Core Competencies

- Intelligent Image Solutions Integrating AI Sensor, AI ISP, and AI Algorithm

- Products & Services

- TetrasMobile AI Sensor Hardware Solution

- TetrasMobile Intelligent Image SDK Solution

- TetrasMobile AI ISP Chip

- TetrasMobile FaceID

- TetrasMobile Smart Video

-

TetrasMobile Basic Perception Product

-

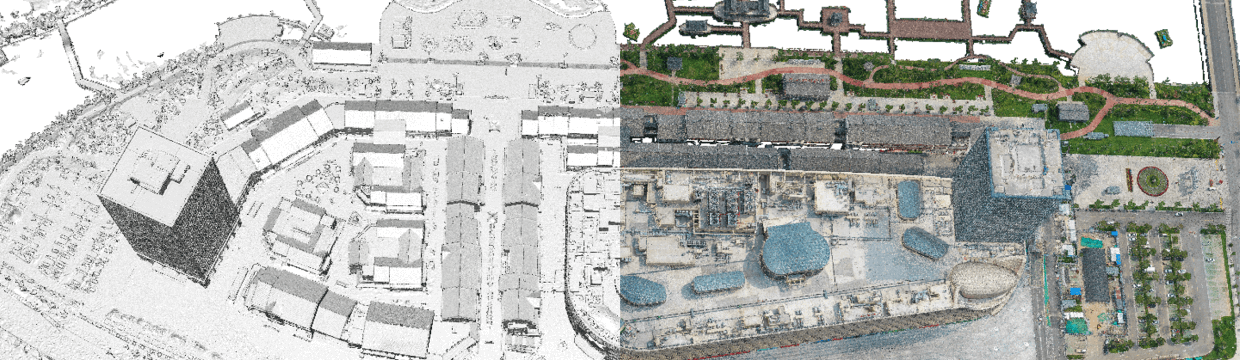

TetrasMobile 3D Vision

-

TetrasMobile Augmented Reality Platform

- News & Events

- Contact Us